Windows Thread Synchronization 42

Synchronization with Spinlocks Program Example

In the previous example, as noted, there is still no synchronization between the two threads, so the output may still appear out of order. One simple mechanism that offers synchronization is a spinlock. To implement one, use a variant of the Interlocked functions called InterlockedCompareExchange().

The InterlockedCompareExchange() function atomically compares the destination value (first parameter) with the comperand value (third parameter). If the two are equal, the exchange value (second parameter) is stored at the address specified by destination; otherwise, no operation is performed. In both cases, the function returns the initial value of the destination. Because the comparison and the exchange happen as a single atomic operation, the function prevents more than one thread from using the same variable simultaneously.

The variables for InterlockedCompareExchange() must be aligned on a 32-bit boundary; otherwise, the function behaves unpredictably on multiprocessor x86 systems and on any non-x86 systems. This function and all other functions of the InterlockedExchange() and InterlockedExchange64() family should not be used on memory allocated with the PAGE_NOCACHE modifier, because this may cause hardware faults on some processor architectures. To ensure ordering between reads and writes to PAGE_NOCACHE memory, use explicit memory barriers in your code.
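The compare-and-exchange rule described above can be traced with a small single-threaded model. This is only a sketch: model_compare_exchange() is a hypothetical helper that mirrors the parameter order of InterlockedCompareExchange() (destination, exchange, comperand), and unlike the real function it is not atomic, so it must not be used for actual synchronization.

```c
/* Single-threaded MODEL of the rule that InterlockedCompareExchange()
   applies. Hypothetical helper for illustration only: it is NOT atomic. */
long model_compare_exchange(long *destination, long exchange, long comperand)
{
    long initial = *destination;   /* value held before the call */

    if (initial == comperand)
        *destination = exchange;   /* store only when the values match */

    return initial;                /* the initial value, match or not */
}
```

With a variable that starts at 1, calling model_compare_exchange(&v, 2, 1) returns 1 and leaves 2 in v. Repeating the same call returns 2 and changes nothing, because the destination no longer matches the comperand. A caller can therefore tell whether its exchange took effect by comparing the return value with the comperand it passed.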

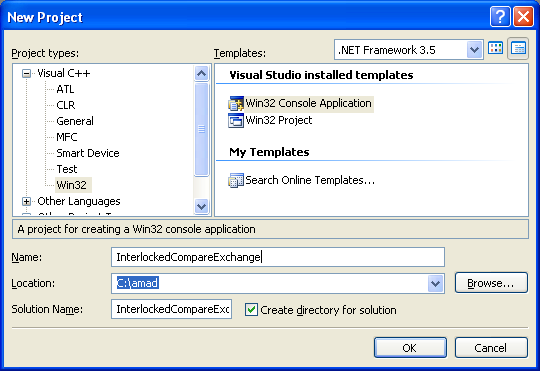

Create a new empty Win32 console application project. Give the project a suitable name and change the project location if needed.

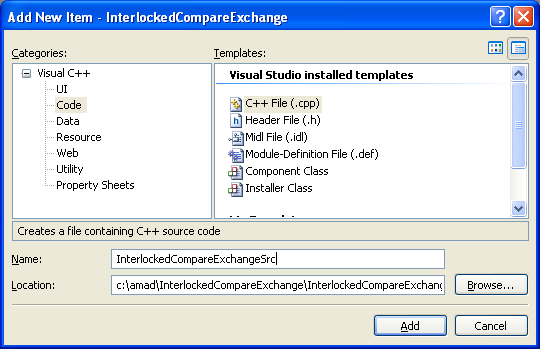

Then, add the source file and give it a suitable name.

Next, add the following source code.

    #include <Windows.h>
    #include <stdio.h>
    #include <stdlib.h>

    // Shared global variables
    // run_now acts as a turn token: 1 = main thread's turn, 2 = child thread's turn
    volatile LONG run_now = 1;
    WCHAR message[] = L"Hello I'm a Thread";

    DWORD WINAPI thread_function(LPVOID arg)
    {
        int count2;

        wprintf(L"thread_function() is running. Argument sent was: %s\n", (WCHAR *)arg);
        wprintf(L"Entering child loop...\n");
        wprintf(L"\n");

        for (count2 = 0; count2 < 10; count2++)
        {
            // If run_now is 2, set it back to 1 and print; otherwise wait and retry.
            if (InterlockedCompareExchange(&run_now, 1, 2) == 2)
                wprintf(L"X-2 ");
            else
                Sleep(1000);
        }

        Sleep(2000);
        return 0;
    }

    int wmain(void)
    {
        HANDLE a_thread;
        DWORD a_threadId;
        DWORD thread_result;
        int count1;

        wprintf(L"The process ID is %u\n", GetCurrentProcessId());
        wprintf(L"The main() thread ID is %u\n", GetCurrentThreadId());
        wprintf(L"\n");

        // Create a new thread.
        a_thread = CreateThread(NULL, 0, thread_function, (PVOID)message, 0, &a_threadId);
        if (a_thread == NULL)
        {
            wprintf(L"CreateThread() - Thread creation failed, error %u\n", GetLastError());
            exit(EXIT_FAILURE);
        }
        else
            wprintf(L"CreateThread() is OK, thread ID is %u\n", a_threadId);

        wprintf(L"Entering main() loop...\n");

        for (count1 = 0; count1 < 10; count1++)
        {
            // The function returns the initial value of the Destination (parameter 1).
            // If run_now is 1, set it to 2 and print; otherwise wait and retry.
            if (InterlockedCompareExchange(&run_now, 2, 1) == 1)
                wprintf(L"Y-1");
            else
                Sleep(3000);
        }

        wprintf(L"\n");
        wprintf(L"\nWaiting for thread %u to finish...\n", a_threadId);

        if (WaitForSingleObject(a_thread, INFINITE) != WAIT_OBJECT_0)
        {
            wprintf(L"Thread join failed! Error %u\n", GetLastError());
            exit(EXIT_FAILURE);
        }
        else
            wprintf(L"WaitForSingleObject() is OK, an object was signalled...\n");

        // Retrieve the code returned by the thread.
        if (GetExitCodeThread(a_thread, &thread_result) != 0)
            wprintf(L"GetExitCodeThread() is OK! Thread joined, exit code %u\n", thread_result);
        else
            wprintf(L"GetExitCodeThread() failed, error %u\n", GetLastError());

        if (CloseHandle(a_thread) != 0)
            wprintf(L"a_thread handle was closed successfully!\n");
        else
            wprintf(L"Failed to close a_thread handle, error %u\n", GetLastError());

        return EXIT_SUCCESS;
    }

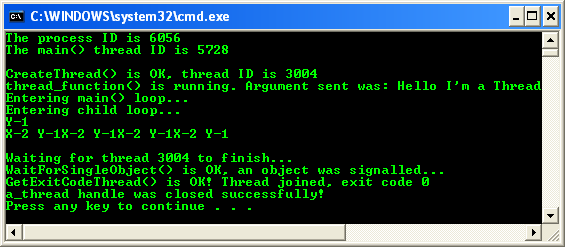

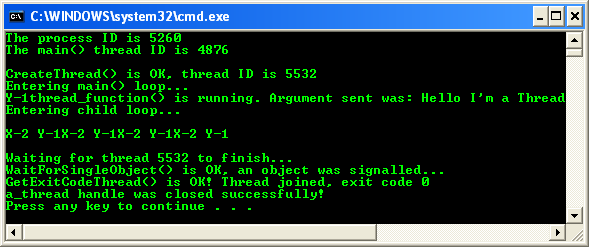

Build and run the project. The sample outputs were captured over several runs of the program.

Spinlocks work well for synchronizing access to a single object, but most applications are not this simple. Moreover, spinlocks are not the most efficient means of controlling access to a shared resource: running a loop in user mode while waiting for a global value to change wastes CPU cycles. A mechanism is needed that does not consume CPU time while a thread waits to access a shared resource.

When a thread requires access to a shared resource (for example, a shared memory object), it must either be notified or scheduled to resume execution when that resource becomes available. To accomplish this, the thread calls an operating system function, passing parameters that indicate what the thread is waiting for. If the operating system detects that the resource is available, the function returns and the thread resumes. If the resource is unavailable, the system places the thread in a wait state, making the thread non-schedulable. This prevents the thread from wasting any CPU time.

While a thread is waiting, the system mediates between the thread and the resource: it tracks the resources that the thread needs and automatically resumes the thread when the resource becomes available. The execution of the thread is thus synchronized with the availability of the resource. Mechanisms that prevent the thread from wasting CPU time include:

- Mutexes

- Critical sections

- Semaphores

Windows includes all three of these mechanisms, and UNIX provides both semaphores and mutexes. These three mechanisms are described in the following sections.
